Segmentation

Image segmentation is the process of partitioning a digital image into multiple segments (sets of pixels, also known as image objects). The purpose of segmentation is to simplify or change an image’s representation into something more meaningful and easier to analyse.

Our ability to segment the region of interest is crucial for all of our image analysis projects, from DNA ploidy to Histotyping. Traditionally, we have depended on pathologists to identify the tumour in the tissue sample and to distinguish between epithelium and stroma. Based on these assessments, we have prepared suspensions of isolated nuclei in order to measure them accurately. A successful transition from traditional pathology via digital pathology to fully automated in silico pathology requires new solutions that replace these manual tasks.

Tumour segmentation

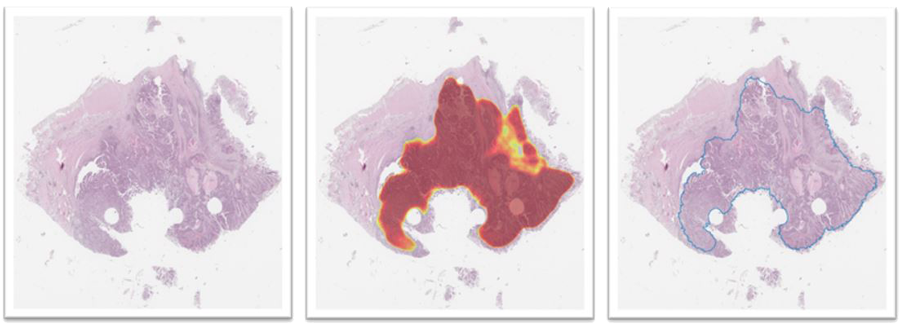

We are working on segmenting images of tissue sections into cancerous and non-cancerous regions. The tissue sections have been stained with routine haematoxylin and eosin and scanned with a microscopy scanner at so-called 40× magnification.

A method using deep neural networks and conditional random fields is already established and being utilised in Histotyping. This method has been shown to work sufficiently well on scans from colorectal cancer and lung adenocarcinomas. In both cases, the neural network and the postprocessing are the same, apart from minor differences in the postprocessing. The main difference is the training data: the colorectal model is trained on scans from colorectal cancer and the lung model on scans from lung cancer.

The model used in the Histotyping colorectal cancer project was applied to 100 consecutive cases from a prostate cancer cohort, and the results were unsatisfactory. A method for detailed segmentation and tissue classification in prostate cancer should be established and evaluated. The method used in colorectal cancer should be trained on defined training sets from prostate and lung, and its performance evaluated in test sets. The method’s performance should guide the need for refinement, and the possible need for trying a more up-to-date method.

A thorough review of the current state-of-the-art segmentation methods is needed. Experiments should be defined, and their results should be reported in a manuscript.

Lung cancer

Materials and counts are as of December 16th, 2020. A subset of the Histotyping NSCLC development set with available manual annotations is being used for tumour segmentation development. All Histotyping NSCLC development set scans are segmented by the selected and tested automatic segmentation model.

A preliminary segmentation round has been completed. It follows the set-up of the initial Histotyping NSCLC project: the model is trained on scans from 75% of patients with a distinct outcome, tuned on scans from patients with a non-distinct outcome, and tested on scans from the remaining 25% of patients with a distinct outcome (disjoint from the training group). Results are comparable with those from colorectal cancer (CRC), with a mean Informedness score of 0.864 (see Table 13).
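Informedness (also known as Youden's J) is defined as sensitivity + specificity − 1. The sketch below shows how it could be computed pixel-wise for one pair of binary masks. That the project computes it pixel-wise, and how the mean is taken, are assumptions, and the function name `informedness` and the toy masks are purely illustrative.

```python
import numpy as np

def informedness(pred_mask, true_mask):
    """Pixel-wise informedness (Youden's J) = sensitivity + specificity - 1."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    tp = np.sum(pred & true)    # tumour pixels predicted as tumour
    tn = np.sum(~pred & ~true)  # non-tumour pixels predicted as non-tumour
    fp = np.sum(pred & ~true)   # non-tumour pixels predicted as tumour
    fn = np.sum(~pred & true)   # tumour pixels predicted as non-tumour
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("the reference mask must contain both classes")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return float(sensitivity + specificity - 1.0)

# Toy masks (assumed data, not from the project): 1 = tumour, 0 = other.
true = np.array([[1, 1, 0, 0],
                 [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0]])
score = informedness(pred, true)  # sensitivity 0.75, specificity 0.75
```

A score of 1 means perfect agreement, 0 is no better than chance, and negative values indicate systematic disagreement.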

This algorithm will be included as a part of the Histotyping NSCLC network.

Prostate cancer

Materials and counts are as of December 16th, 2020. A subset of the Histotyping Prostate development set with available manual annotations is being used for tumour segmentation development. All Histotyping Prostate development set scans are segmented by the selected and tested automatic segmentation model.

A preliminary segmentation round is almost completed. This follows the initial Histotyping Prostate project and is therefore trained on scans from 75% of patients with a distinct outcome, tuned on scans from patients with a non-distinct outcome, and tested on scans from 25% of patients with a distinct outcome (disjoint from the training group). Training and tuning have been completed. Test results are expected early in Q1, 2021.
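The patient-level split described above can be sketched as follows. This is a minimal illustration, not the project's actual procedure: the function name `split_patients`, the outcome labels `"distinct"` and `"non-distinct"`, the patient identifiers and the random seed are all assumptions. The key point is that the split is made per patient, so scans from one patient never end up in more than one group.

```python
import random

def split_patients(outcomes, train_fraction=0.75, seed=0):
    """Split patient IDs into train / tune / test groups.

    outcomes maps a patient ID to "distinct" or "non-distinct"
    (hypothetical labels chosen for this sketch).
    """
    distinct = sorted(p for p, o in outcomes.items() if o == "distinct")
    non_distinct = sorted(p for p, o in outcomes.items() if o != "distinct")
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(distinct)
    n_train = round(train_fraction * len(distinct))
    return {
        "train": distinct[:n_train],  # 75% of distinct-outcome patients
        "tune": non_distinct,         # all non-distinct-outcome patients
        "test": distinct[n_train:],   # remaining distinct-outcome patients
    }

# Toy cohort (assumed): 8 distinct-outcome and 4 non-distinct-outcome patients.
outcomes = {f"P{i:02d}": ("distinct" if i % 3 else "non-distinct") for i in range(12)}
split = split_patients(outcomes)
```

Scans would then be assigned to a group through their patient ID, which keeps the test group disjoint from the training group.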

A successful algorithm will be implemented in the Histotyping Prostate network.

Tumour Tissue Segmentation

In scans of prostate cancer tissue, regions have been carefully classified into tumour, benign epithelium and other tissue (see figure 19). In the colorectal and lung cancer projects, entire scans are annotated, and the resulting annotations are approximate and not very detailed. The prostate annotations, by contrast, are detailed and made on multiple so-called high-power fields. The annotated tissue is also classified, rather than just marked as foreground or background. This dataset will be the basis for a new CNN-based method for tissue segmentation, as well as a new method to create a training set for cell-type classification.

The dataset consists of 469 frames with approximately 14,000 tissue objects, divided between a training set and a test set. The tissue objects in the training set are from 15 good prognosis and 10 poor prognosis patients, and those in the test set are from 14 good prognosis and 10 poor prognosis patients.

Nuclei Segmentation

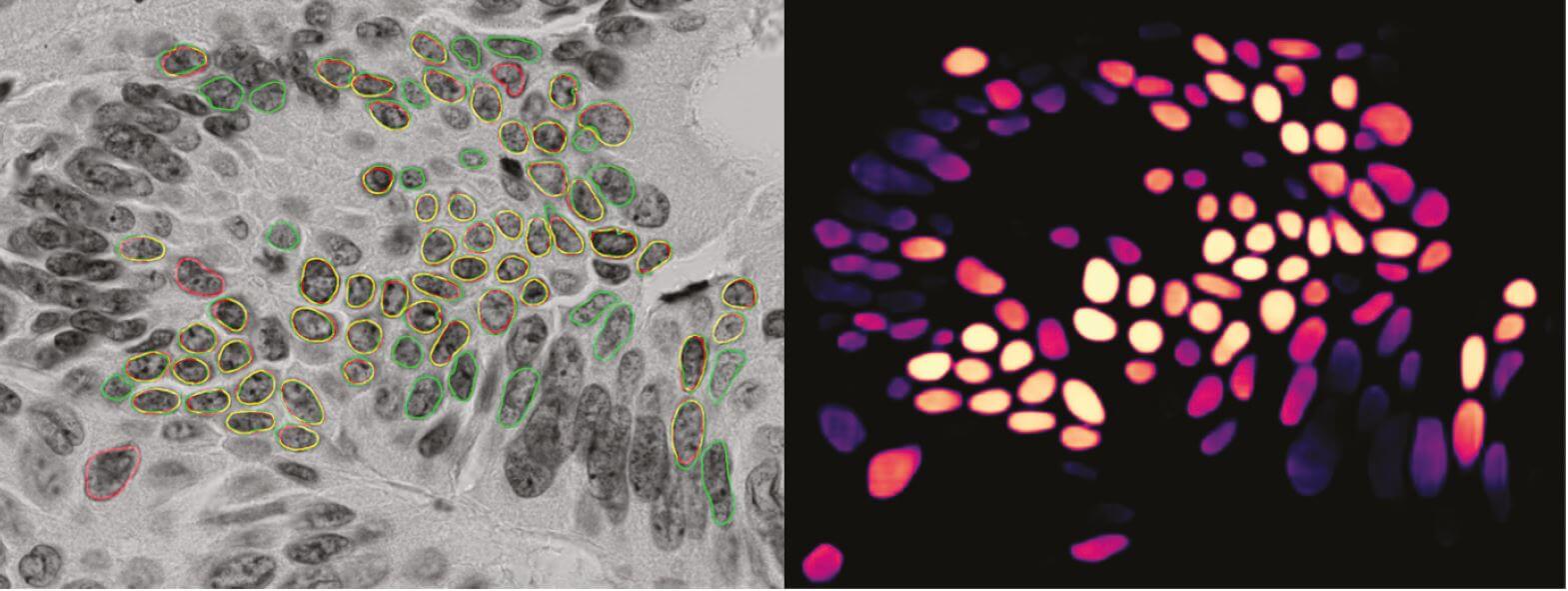

A method based on deep convolutional networks has been developed and works reasonably well for images of colorectal cancer. The main reason for this is that it is trained on hand-segmented images from colorectal cancer. The assumption was that a method performing well in colorectal cancer would also perform well in prostate cancer, because prostate cancer images were assumed to be easier to segment. When the method trained on images from colorectal cancer was applied to prostate cancer images, however, the result was not satisfactory. The main problem is that too few nuclei are detected. Hence, a baseline model should be developed that works sufficiently well on prostate cancer images.

The images are grayscale, with a size of 1040 × 1388 pixels. They show cancerous tissue stained with Feulgen staining and are captured at so-called 63× magnification (around 600×). The task is to segment the images into foreground regions (cell nuclei) and background regions.

The first step is to establish a set of hand-annotated images that can be used for performance evaluation. We have hand-segmented images from about 200 patients, with about 2000 segmented nuclei from each. A partition, e.g., 50%, is to be used as a test set, and the remainder as a training set. A similar set-up was successfully established for colorectal cancer.

Three models have been developed and their performance evaluated on the test set of hand-segmented images from the prostate. All models use the same network, the one that we have already established, and differ only by the data they are trained on:

- Images from colorectal cancer

- Images from colorectal cancer and prostate cancer

- Images from prostate cancer

The first experiment establishes a baseline and should give a quantitative understanding of the performance on prostate images relative to the performance in colorectal cancer. The model trained on images from both colorectal cancer and prostate cancer is expected to perform better on prostate cancer images than the model trained only on images from colorectal cancer. The performance of this model should also be evaluated on the colorectal test set. The final model, trained only on prostate images, should perform well on images from the prostate, but it may not be as general as the model trained on both colorectal and prostate images. This is partly because there are relatively few training examples from the prostate. Refinement of the postprocessing method should also be considered.

The current postprocessing method segments the probability map images produced by the neural network and is designed to perform well for well-defined and isolated nuclei. It handles nuclei with weak probabilities poorly and is also inefficient. As the method is intended to segment images at 63× magnification, it needs to be fast to be usable on entire scans. A new postprocessing method that takes the original image into account has been implemented and will be evaluated during Q1, 2021.
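To make the idea of segmenting a probability map concrete, here is a generic baseline: threshold the map, label connected components, and discard very small objects. It is not the project's current or new postprocessing method, whose details are not given here. The function name `probability_map_to_objects` and the parameters `threshold` and `min_area` are assumptions, and the sketch requires SciPy.

```python
import numpy as np
from scipy import ndimage

def probability_map_to_objects(prob_map, threshold=0.5, min_area=4):
    """Turn a nucleus probability map into a label image (0 = background)."""
    mask = np.asarray(prob_map) >= threshold
    labels, n = ndimage.label(mask)  # 4-connected components by default
    areas = np.bincount(labels.ravel(), minlength=n + 1)
    keep = areas >= min_area
    keep[0] = False                  # label 0 is background, never an object
    kept_ids = np.flatnonzero(keep)
    # Map surviving labels to 1..k and everything else to 0.
    lookup = np.zeros(n + 1, dtype=np.int32)
    lookup[kept_ids] = np.arange(1, len(kept_ids) + 1)
    return lookup[labels]

# Toy probability map (assumed data).
prob = np.zeros((6, 6))
prob[0:3, 0:3] = 0.9  # a well-defined nucleus
prob[5, 5] = 0.8      # an isolated bright pixel, removed by the area filter
prob[4, 1] = 0.3      # a weak response, lost at the threshold
objects = probability_map_to_objects(prob)
```

The weak response at 0.3 illustrates the limitation noted above: a fixed threshold on the probability map alone discards nuclei with weak probabilities, which is one motivation for also using the original image.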

The existing segmentation method is based on an implementation of an older network architecture (2016). It performs so-called semantic segmentation, which means that it separates objects of different classes but does not differentiate between objects of the same class. This is fine in, e.g., tumour segmentation, but problematic in nucleus segmentation, where we are interested in distinguishing individual nuclei and where nuclei tend to be relatively small and clustered.

A different approach is so-called instance segmentation, where the segmentation method is also expected to differentiate between different instances within the same class. Since the first well-known attempt in 2017, these methods have received increasing attention in the segmentation of cell nuclei.
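The difference between the two outputs can be illustrated with a toy example of two touching nuclei. The label map below is invented for illustration, and the sketch requires SciPy. A semantic mask only records nucleus versus background, so recovering objects from it by connected-component labelling merges the two nuclei, whereas an instance label map keeps them apart.

```python
import numpy as np
from scipy import ndimage

# Hypothetical instance label map: 0 = background, 1 and 2 = two touching nuclei.
instance_map = np.array([
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 0, 0, 0, 0, 0],
])

# Semantic segmentation keeps only the class of each pixel.
semantic_mask = instance_map > 0

# Connected components on the semantic mask cannot separate touching nuclei.
_, n_from_semantic = ndimage.label(semantic_mask)

# The instance map still distinguishes them (background label excluded).
n_instances = len(np.unique(instance_map)) - 1
```

Here the semantic mask yields one object while the instance map contains two, which is exactly the clustered-nuclei case that otherwise has to be resolved in postprocessing.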

Instance segmentation methods are expected to reduce the problem with clustered nuclei, a hard problem that is often left to the postprocessing. The method using instance segmentation has been implemented, and a new segmentation performance evaluation framework has been applied. The framework currently comprises all expected supervised evaluation methods based on pixel counts and object counts.
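As an illustration of an evaluation based on object counts, the sketch below matches predicted nuclei to reference nuclei by intersection over union (IoU) and counts true positives, false positives and false negatives. The matching rule (IoU above 0.5), the function name `object_counts` and the toy label images are assumptions, and the project's framework may use different criteria.

```python
import numpy as np

def object_counts(pred_labels, true_labels, iou_threshold=0.5):
    """Count matched and unmatched objects between two label images.

    Label 0 is background. A predicted object matches a reference object
    when their IoU exceeds iou_threshold; above 0.5 a match is unique.
    """
    pred_ids = [i for i in np.unique(pred_labels) if i != 0]
    true_ids = [i for i in np.unique(true_labels) if i != 0]
    matched_true = set()
    tp = 0
    for p in pred_ids:
        p_mask = pred_labels == p
        for t in true_ids:
            if t in matched_true:
                continue
            t_mask = true_labels == t
            inter = np.sum(p_mask & t_mask)
            union = np.sum(p_mask | t_mask)
            if inter / union > iou_threshold:
                matched_true.add(t)
                tp += 1
                break
    fp = len(pred_ids) - tp  # predicted objects without a reference match
    fn = len(true_ids) - tp  # reference objects that were missed
    return tp, fp, fn

# Toy label images (assumed data).
true_labels = np.array([[1, 1, 0, 2, 2],
                        [1, 1, 0, 2, 2]])
pred_labels = np.array([[1, 1, 0, 0, 0],
                        [1, 0, 0, 0, 3]])
tp, fp, fn = object_counts(pred_labels, true_labels)
```

In this toy case the first nucleus is matched (IoU 0.75), while the second is only grazed by a one-pixel prediction (IoU 0.25), giving one true positive, one false positive and one false negative. Object-based counts like these complement pixel-based scores, which can look good even when clustered nuclei are merged.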